The Hunyuan Video AI model is a cutting-edge text-to-video generation model developed by Tencent's Hunyuan AI team. It turns text prompts into high-quality video clips and is available in a range of quantized versions to suit different hardware budgets.

Quantized Versions Available:

The model is available in multiple quantization levels, each balancing performance and resource requirements:

- Q3 (3-bit): Models like Q3_K_S and Q3_K_M have the lowest precision in this list, giving the smallest files and lowest memory usage at some cost in quality. They suit environments with limited computational resources.

- Q4 (4-bit): Versions such as Q4_K_S, Q4_0, Q4_1, and Q4_K_M provide a middle ground between performance and quality, making them versatile for various applications.

- Q5 (5-bit): Models like Q5_K_S, Q5_0, Q5_1, and Q5_K_M offer higher precision, resulting in improved video quality at the cost of increased computational demands.

- Q6 (6-bit): The Q6_K model provides even higher precision, suitable for applications where video quality is paramount.

- Q8 (8-bit): The Q8_0 model offers near full-precision performance, delivering the highest video quality among the quantized versions.

- BF16 (16-bit): The BF16 model maintains full precision, ensuring the best possible video quality, ideal for high-end computational environments.
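To compare these levels in concrete terms, the sketch below estimates how much storage the video transformer's weights need under each format. It assumes roughly 13 billion parameters (the size Tencent reported for HunyuanVideo) and the standard GGML/GGUF block layouts (bytes per block, weights per block). The _K_S and _K_M variants are per-layer mixes built on the Q3_K, Q4_K and Q5_K base types, and real files typically keep some small tensors at higher precision, so treat the results as ballpark figures, not actual download sizes.

```python
# Bytes per block and weights per block for common GGML/GGUF formats.
GGUF_BLOCKS = {
    "Q3_K": (110, 256),
    "Q4_0": (18, 32),
    "Q4_1": (20, 32),
    "Q4_K": (144, 256),
    "Q5_0": (22, 32),
    "Q5_1": (24, 32),
    "Q5_K": (176, 256),
    "Q6_K": (210, 256),
    "Q8_0": (34, 32),
    "BF16": (2, 1),  # plain 16-bit, no blocks
}


def bits_per_weight(fmt: str) -> float:
    """Average storage cost of one weight, including per-block scales."""
    block_bytes, block_size = GGUF_BLOCKS[fmt]
    return block_bytes * 8 / block_size


def approx_size_gib(n_params: float, fmt: str) -> float:
    """Weight storage in GiB if every weight used this format (ignores metadata)."""
    return n_params * bits_per_weight(fmt) / 8 / 2**30


if __name__ == "__main__":
    N_PARAMS = 13e9  # assumption: parameter count of the HunyuanVideo transformer
    for fmt in GGUF_BLOCKS:
        print(f"{fmt:5s} {bits_per_weight(fmt):7.4f} bits/weight "
              f"~{approx_size_gib(N_PARAMS, fmt):5.1f} GiB")
```

This covers only the video transformer; the text encoders and VAE listed in the setup steps take additional disk space and memory.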

Setup and Usage:

To utilize the Hunyuan Video AI model, follow these steps:

- Download the Model Files:

- Choose the quantized model version that best fits your needs from the Hugging Face repository.

- Place the downloaded .gguf file into the ./ComfyUI/models/unet directory.

- Additional Components:

- Download clip_l.safetensors (246MB) and place it in ./ComfyUI/models/text_encoders.

- Download llava_llama3_fp8_scaled.safetensors (9.09GB) and place it in ./ComfyUI/models/text_encoders.

- Download hunyuan_video_vae_bf16.safetensors (493MB) and place it in ./ComfyUI/models/vae.

- Running the Model:

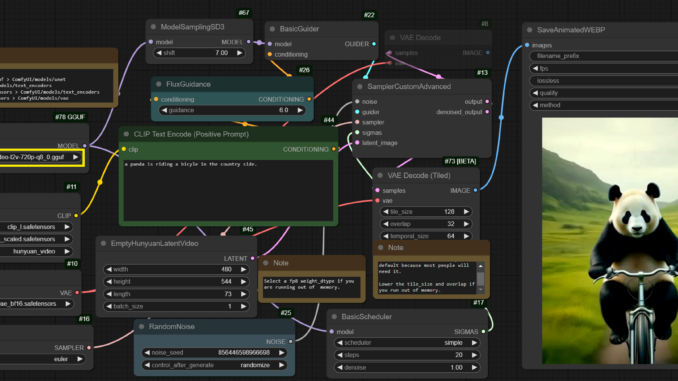

- Load the provided workflow file, workflow-hunyuan-gguf.json, into your ComfyUI canvas.

- Open the ComfyUI Manager and install any missing custom nodes. If you are not sure how to do this, check my other ComfyUI articles.

- Restart ComfyUI after the missing nodes are installed.

- Select the model file you downloaded in the workflow's model loader node, and you are ready to generate.
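If a generation fails with a missing-file error, it can help to check the folder layout from the setup steps before digging into the workflow. The sketch below does that and also shows one way to switch the model in a workflow without the canvas. Several parts are assumptions: it expects an API-format export of the workflow (ComfyUI stores node graphs in a different UI format by default), the loader class name UnetLoaderGGUF and its unet_name input are assumed from the ComfyUI-GGUF custom node, and the file name workflow-hunyuan-gguf-api.json is hypothetical.

```python
import json
from pathlib import Path

# File layout from the setup steps, relative to the ComfyUI folder.
REQUIRED_FILES = {
    "models/text_encoders": ["clip_l.safetensors",
                             "llava_llama3_fp8_scaled.safetensors"],
    "models/vae": ["hunyuan_video_vae_bf16.safetensors"],
}


def check_layout(comfy_root: Path) -> list[str]:
    """Return a list of problems; an empty list means the files look in place."""
    problems = []
    for subdir, names in REQUIRED_FILES.items():
        for name in names:
            if not (comfy_root / subdir / name).is_file():
                problems.append(f"missing {subdir}/{name}")
    unet_dir = comfy_root / "models" / "unet"
    if not unet_dir.is_dir() or not any(unet_dir.glob("*.gguf")):
        problems.append("no .gguf model in models/unet")
    return problems


def set_gguf_model(workflow: dict, gguf_name: str,
                   loader_class: str = "UnetLoaderGGUF") -> int:
    """Point every GGUF loader node in an API-format workflow at gguf_name.

    Assumes API-format JSON ({node_id: {"class_type": ..., "inputs": {...}}})
    and a loader input named "unet_name". Returns the number of nodes changed.
    """
    changed = 0
    for node in workflow.values():
        if isinstance(node, dict) and node.get("class_type") == loader_class:
            node.setdefault("inputs", {})["unet_name"] = gguf_name
            changed += 1
    return changed


if __name__ == "__main__":
    root = Path("./ComfyUI")
    for problem in check_layout(root):
        print(problem)
    unet_dir = root / "models" / "unet"
    ggufs = sorted(unet_dir.glob("*.gguf")) if unet_dir.is_dir() else []
    api_file = Path("workflow-hunyuan-gguf-api.json")  # hypothetical export name
    if api_file.is_file() and ggufs:
        wf = json.loads(api_file.read_text())
        print("loader nodes updated:", set_gguf_model(wf, ggufs[0].name))
        api_file.write_text(json.dumps(wf, indent=2))
```

Picking the first .gguf file alphabetically is only a convenience for the sketch; if you keep several quantizations in models/unet, pass the name you want explicitly.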

Examples:

Prompt: a panda is riding a bicycle in the countryside.

Prompt: a beautiful busty asian female in a red dress walking down a busy street sidewalk. she is slender and thin. She is blinking naturally and smiling.

Prompt: a beautiful busty asian female in a black mini dress walking down a busy shopping mall. she is slender and thin. She is blinking naturally and smiling.

I am using an RTX 4090 Laptop GPU with 16GB of VRAM. At a resolution of 400 x 544 and 73 frames, generating one video took about 4 minutes 30 seconds, with VRAM usage of about 15GB.
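The frame count of 73 is not arbitrary. The sketch below computes the latent tensor size for these settings, assuming the HunyuanVideo VAE compresses 4x in time and 8x in each spatial dimension into 16 channels (frame counts of the form 4k + 1), and that 400 x 544 means width x height. The 1x2x2 transformer patch size used for the token count is also an assumption.

```python
def hunyuan_latent_shape(width: int, height: int, frames: int,
                         channels: int = 16, t_ratio: int = 4,
                         s_ratio: int = 8) -> tuple[int, int, int, int]:
    """(channels, latent_frames, latent_height, latent_width) under the assumed VAE ratios."""
    if frames < 1 or (frames - 1) % t_ratio != 0:
        raise ValueError(f"frame count must be {t_ratio}k + 1, got {frames}")
    if width % s_ratio or height % s_ratio:
        raise ValueError(f"width and height must be multiples of {s_ratio}")
    return (channels, (frames - 1) // t_ratio + 1,
            height // s_ratio, width // s_ratio)


def token_count(shape: tuple[int, int, int, int],
                patch: tuple[int, int, int] = (1, 2, 2)) -> int:
    """Number of transformer tokens if latents are split into t x h x w patches."""
    _, t, h, w = shape
    pt, ph, pw = patch
    return (t // pt) * (h // ph) * (w // pw)


if __name__ == "__main__":
    shape = hunyuan_latent_shape(400, 544, 73)
    print("latent shape:", shape)
    print("tokens:", token_count(shape))
```

For 400 x 544 at 73 frames this gives a 16 x 19 x 68 x 50 latent and 16,150 tokens. Since self-attention cost grows roughly quadratically with the number of tokens, raising the resolution or frame count increases generation time and VRAM use quickly.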

Community and Support:

The Hunyuan Video AI model has garnered attention within the AI community, leading to discussions and further developments:

- Users have explored accelerated versions like FastHunyuan, which samples high-quality videos with significantly fewer diffusion steps, resulting in up to an 8x speed increase compared to the original model.

- Community discussions and support can be found on the Hugging Face forums, where users share experiences, troubleshooting tips, and enhancements.

By leveraging the Hunyuan Video AI model, creators can efficiently generate dynamic video content from textual descriptions, opening new avenues in content creation and AI-driven media generation.

This post may contain affiliate links. When you click on a link and purchase a product, we receive a small commission that helps keep us running. Thanks.
